User-generated content sparks meaningful conversations and helps build a more engaged community. But while free speech is crucial for our democratic society, we must consider its limits.

Trolls, bots, and scams make the comment section unpleasant and unsafe, taking away from the whole experience of community engagement. Content moderation is key to addressing this critical problem. It helps you make the right decisions to maintain a positive and safe online environment for users.

Read on to learn how to implement an effective content moderation process for your online community.

What is content moderation & why does it matter?

Content moderation is the process of monitoring and reviewing what people post online to ensure it follows a brand’s posting guidelines and standards.

Here’s how it works: You hire a content moderator to check messages, comments, and other user-generated content like images, video, and audio. Their goal is to:

- Give genuine replies to genuine accounts, whether they’re followers, customers, or just random people.

- Remove mean comments from trolls, and stop spam accounts that spread lies about your business.

Why is content moderation important?

Social media can be a tough place. Without moderation, your brand’s website and social media channels could make a poor impression. People may conclude that your company doesn’t care what its audience says or about keeping a pleasant online space for customers, and they may stop supporting you.

In contrast, moderating content keeps your brand safe online while enhancing your marketing efforts.

Who needs a content moderation strategy?

Several organizations can benefit from a content moderation strategy that ensures their online platforms remain safe and aligned with their brand identity.

Here’s a closer look:

Businesses and brands

From small startups to big corporations, any business that’s on social media or has a website should moderate content to keep its online communities respectful, safe, and consistent with its brand values. Doing so protects the brand’s reputation.

Online platforms and communities

Next on the list are websites, forums, and social media platforms like Reddit and YouTube that let users share content. Good content moderation makes sure these platforms offer a positive user experience and comply with legal regulations regarding harmful content, such as hate speech or explicit material.

Ecommerce platforms

Ecommerce sites and mobile apps rely on user reviews and comments to build trust and credibility among shoppers. Moderating content here ensures these reviews and comments are genuine and helpful, which also supports efforts such as ecommerce email marketing. It also stops fake or misleading reviews that could hurt the shop’s reputation.

Gaming and virtual communities

Online games and virtual worlds often let users interact with each other. Moderating content here is vital to keep the environment friendly and safe. It stops bullying, makes sure everyone follows the rules, and protects younger players from exposure to inappropriate content and online predators.

Educational institutions and online learning platforms

Schools, colleges, and online learning sites need content moderation too. It keeps the learning environment respectful and productive. Moderators can watch discussions and messages to stop cheating, bullying, or the sharing of inappropriate material. This helps teachers focus on teaching in a safe space.

Why content moderation can be so challenging

Content moderators should be prepared for the following challenges:

- Scale: Expect a huge volume of content on your channels every minute, especially if you have a marketing localization strategy in place. Even with advanced algorithms and tools, moderators spend a lot of time going through text, images, and videos, making it tough to spot and address problematic material quickly.

- Subjectivity: Deciding if the content is appropriate depends on context and personal views. What may be acceptable in one context could be offensive or harmful in another. Moderators need to consider cultural norms, societal values, and linguistic nuances and stay consistent in their decisions.

- Content diversity: Content comes in various forms, including text, images, videos, and links. Each format presents its own challenges and therefore needs a different approach to moderation. This also makes it difficult to develop automated systems that can effectively moderate every type of content and simplify content operations.

- Legal and ethical concerns: Moderators have to make choices that could have legal or moral effects. It’s tough to balance letting people say what they want while protecting others, especially with sensitive or controversial topics.

- User expectations: People have different ideas about how content should be handled. Some want strict rules, while others want more freedom. Finding a middle ground that makes both sides happy is hard.

- Anonymity and pseudonymity: People can behave differently online when no one knows who they are. This opens the door for trolls, bots, and malicious actors to spread misinformation or harass others, and makes it harder for moderators to stop them.

- Psychological impact: Moderators often see upsetting content like violence or hate speech, which can affect their mental health. You must provide them with adequate support and resources to cope with what they see.

5 popular content moderation methods

There are multiple content moderation methods, each with its advantages and challenges. Your choice depends on factors like content type, platform goals, and resource availability.

#1. Manual pre-moderation

In manual pre-moderation, every piece of user-generated content is reviewed by a moderator before it appears on the site. The moderator decides whether to publish, reject, or edit the content based on site guidelines.

Manual pre-moderation gives you good control over content quality, helping to spot scams or bad content, especially in sensitive industries like online dating. But it can slow down publishing and cost more, since it requires trained moderators.

#2. Manual post-moderation

With manual post-moderation, content goes live right away and is reviewed afterward by moderators. Users get instant satisfaction, and it’s good for handling less sensitive issues like sorting or duplicates. However, there’s a risk of harmful content going live before moderation, which may lead to offended users or negative publicity.

#3. Reactive moderation

Reactive moderation relies on users flagging or reporting inappropriate content. Moderators then review reported content and take necessary action.

While cost-efficient, relying on user reports gives you little control over what appears on the site, and harmful content may stay up until someone reports it.

#4. Distributed moderation

Distributed moderation lets the community vote on the published content. It decentralizes moderation, with highly voted content rising to the top and lowly voted content being hidden or removed.

This empowers the community to moderate content and works well for engaged users. However, it offers limited control over moderation outcomes and is less effective for sites liable for content.
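To make the voting idea concrete, here is a minimal sketch in Python. The `Post` class, the `visible_ranked` function, and the `HIDE_THRESHOLD` value are all hypothetical names and numbers chosen for illustration; real platforms use their own ranking formulas.

```python
from dataclasses import dataclass

# Hypothetical cutoff: hide a post once its net score falls this low.
HIDE_THRESHOLD = -3


@dataclass
class Post:
    text: str
    upvotes: int = 0
    downvotes: int = 0

    @property
    def score(self) -> int:
        # Net community score: upvotes minus downvotes.
        return self.upvotes - self.downvotes


def visible_ranked(posts: list[Post]) -> list[Post]:
    """Hide posts at or below the threshold, then rank the rest by score."""
    kept = [p for p in posts if p.score > HIDE_THRESHOLD]
    return sorted(kept, key=lambda p: p.score, reverse=True)


posts = [
    Post("Fair question", upvotes=3, downvotes=2),
    Post("Spam link", upvotes=0, downvotes=5),
    Post("Helpful answer", upvotes=12, downvotes=1),
]
ranked = visible_ranked(posts)
print([p.text for p in ranked])
```

Because the outcome depends entirely on how users vote, the threshold is the only lever the site owner keeps in this sketch, which reflects the limited control described above.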

#5. Automated moderation

Automated moderation uses algorithms to filter content quickly and affordably based on preset rules. The result? Improved user experience with instant content visibility. The only catch is that it carries the risk of false positives or negatives. Human moderators have to periodically review flagged content to ensure accuracy.

How to flesh out your own content moderation strategy

A successful content moderation strategy crafted by skilled SOP writers addresses risks, protects users, and fosters a positive online environment. However, you must tailor your approach to fit your platform’s unique requirements.

Here are some quick tips to get you started:

#1. Define your moderation objectives

What are you trying to achieve through content moderation? Treat your answer as the guiding principle for designing your strategy.

Consider objectives such as:

- Ensuring user safety and fostering a positive online community.

- Protecting your brand reputation and maintaining trust among users.

- Following legal regulations and industry standards.

#2. Understand your audience

Study your audience carefully to understand who uses your platform, their age, what they like, and how they act online. This way, you can adjust how you moderate to fit their needs.

Suppose your platform caters to a younger audience. In this case, you’ll need to protect them from cyberbullying and inappropriate content. Knowing what they like will also help you make the website look better and pick the best ways to communicate with them.

#3. Identify potential risks

Look into what problems your platform might face. For example, as an online store, you’re likely to encounter fake reviews, scams, or fake products.

To start, think about the kinds of content users create, how they interact with each other, and threats such as spam or malicious actors. This helps you pick a moderation approach that addresses these problems.

#4. Choose moderation methods

Choose a suitable moderation method based on your objectives, audience, and risk assessment.

Depending on the nature of your platform and the volume of user-generated content, you can also combine manual and automated moderation. For instance, you could use automatic filters to flag likely trolling or spam, then have human moderators review the flagged items for accuracy.
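A combined workflow like this could be sketched as follows. The heuristics in `looks_suspicious` (any link, or mostly upper-case text) and the 0.7 ratio are assumptions made up for this example, not recommended rules; the point is only that the automated step routes content and a person makes the final decision.

```python
def looks_suspicious(comment: str) -> bool:
    """Crude illustrative check: contains a link or is mostly upper-case."""
    lowered = comment.lower()
    if "http://" in lowered or "https://" in lowered:
        return True
    letters = [c for c in comment if c.isalpha()]
    if not letters:
        return False
    upper_ratio = sum(c.isupper() for c in letters) / len(letters)
    return upper_ratio > 0.7  # hypothetical "shouting" cutoff


def triage(comments: list[str]) -> tuple[list[str], list[str]]:
    """Split comments into (published, review_queue)."""
    published, review_queue = [], []
    for comment in comments:
        if looks_suspicious(comment):
            review_queue.append(comment)  # a human moderator decides
        else:
            published.append(comment)
    return published, review_queue


published, review_queue = triage([
    "Loved this article!",
    "Get rich at http://example.test",
    "WHY IS NOBODY ANSWERING ME",
    "Could you explain step two?",
])
print(published)
print(review_queue)
```

Flagged comments are held rather than deleted, so a false positive costs only a short delay once a moderator approves it.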

#5. Set clear guidelines and policies

Next, create comprehensive guidelines and policies outlining acceptable and unacceptable behaviors, language, and content on your platform. Be sure to clearly communicate these guidelines to users to set expectations and ensure compliance.

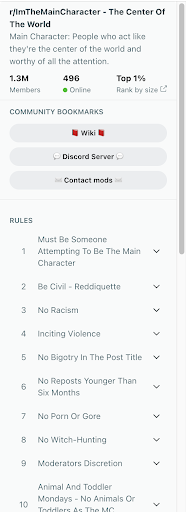

Here are the community guidelines for a Reddit community:

#6. Implement suitable moderation tools

Moderation tools streamline the moderation process and enhance efficiency. This may include content filtering software, reporting systems, and AI-powered moderation algorithms.

For instance, you can use keyword-based filters to automatically flag potentially inappropriate comments for review. Similarly, sentiment analysis tools help identify and address negative or abusive language in user posts.
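As a rough illustration of a keyword-based filter, the Python sketch below flags comments that contain listed terms. The `FLAGGED_TERMS` list and the `flagged_terms` function are placeholders invented for this example; you would substitute terms drawn from your own guidelines.

```python
import re

# Placeholder terms; replace with the list from your own guidelines.
FLAGGED_TERMS = ["scam", "idiot", "fake"]

# \b word boundaries keep "scam" from matching inside "scampi".
_PATTERN = re.compile(
    r"\b(" + "|".join(re.escape(term) for term in FLAGGED_TERMS) + r")\b",
    re.IGNORECASE,
)


def flagged_terms(comment: str) -> list[str]:
    """Return the flagged terms found in a comment, lowercased, in order."""
    return [match.group(1).lower() for match in _PATTERN.finditer(comment)]


print(flagged_terms("Fake reviews, total SCAM"))
print(flagged_terms("The scampi was great"))
```

Whole-word matching reduces false positives but also misses variants such as plurals or deliberate misspellings, which is one reason flagged comments should go to review instead of being removed automatically.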

#7. Train moderators effectively

Train moderators thoroughly on platform guidelines, techniques, and tools to enforce policies and handle various scenarios. This includes topics like identifying hate speech and resolving conflicts.

Note that training sessions should be ongoing to keep moderators updated on evolving trends and best practices.

#8. Monitor and evaluate performance

Regularly review and update your moderation strategy to address emerging challenges and opportunities. Monitor KPIs like user satisfaction and response times to adjust moderation tactics or implement new measures to combat issues like spam or fraudulent activity.
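For a KPI such as response time, a small script can turn a moderation log into numbers you can track. The log format, the sample timestamps, and the 60-minute target below are all assumptions for illustration, not figures from any real platform.

```python
from datetime import datetime
from statistics import median

# Hypothetical log: (time reported, time resolved) for each user report.
reports = [
    (datetime(2024, 5, 1, 9, 0), datetime(2024, 5, 1, 9, 20)),
    (datetime(2024, 5, 1, 10, 0), datetime(2024, 5, 1, 11, 30)),
    (datetime(2024, 5, 1, 12, 0), datetime(2024, 5, 1, 12, 10)),
]

TARGET_MINUTES = 60  # assumed service target


def response_minutes(log):
    """Minutes between each report and its resolution."""
    return [
        (resolved - reported).total_seconds() / 60
        for reported, resolved in log
    ]


minutes = response_minutes(reports)
median_minutes = median(minutes)
within_target = sum(m <= TARGET_MINUTES for m in minutes) / len(minutes)

print(f"Median response time: {median_minutes:.0f} min")
print(f"Resolved within target: {within_target:.0%}")
```

The median is used here because a single slow case would distort an average; tracking the share resolved within target alongside it shows how often users wait too long.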

Be prepared to revise moderation guidelines or policies in response to changes in user behavior or community dynamics.

Get, set, moderate

Start content moderation early to tackle scalability challenges and avoid misinterpreting good content. Assess your content and user behavior to determine the best moderation approach, and establish clear rules and guidelines for consistency among moderators.

In addition to community and social moderation, you can leverage link-building to strengthen your brand’s digital presence. VH Info can be your growth and brand-building partner, providing dedicated link-building experts who develop an ideal strategy and target premium websites to build a strong backlink portfolio.

Get in touch to learn more.