Search engines are the gateway to the vast information available on the internet.

To provide relevant search results, they rely on complex indexing algorithms that discover, analyze, and store web page data. For a SaaS company, understanding how these algorithms work is important for optimizing your website’s visibility and attracting organic traffic.

In this comprehensive guide, VH Info dives deep into the world of search engine indexing algorithms, providing actionable insights to help you improve your SaaS link-building strategy.

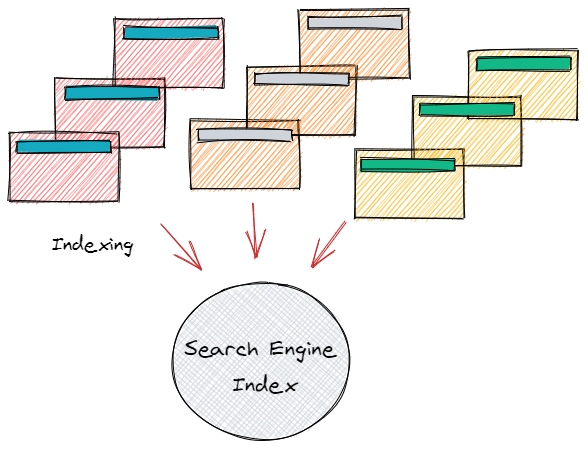

What is Search Engine Indexing?

Search engine indexing is the process by which search engines collect, organize, and store information about web pages before any search query is made. The purpose is to let the search engine identify relevant web pages quickly and accurately when a query arrives.

How Do Search Engines Discover and Crawl Web Pages?

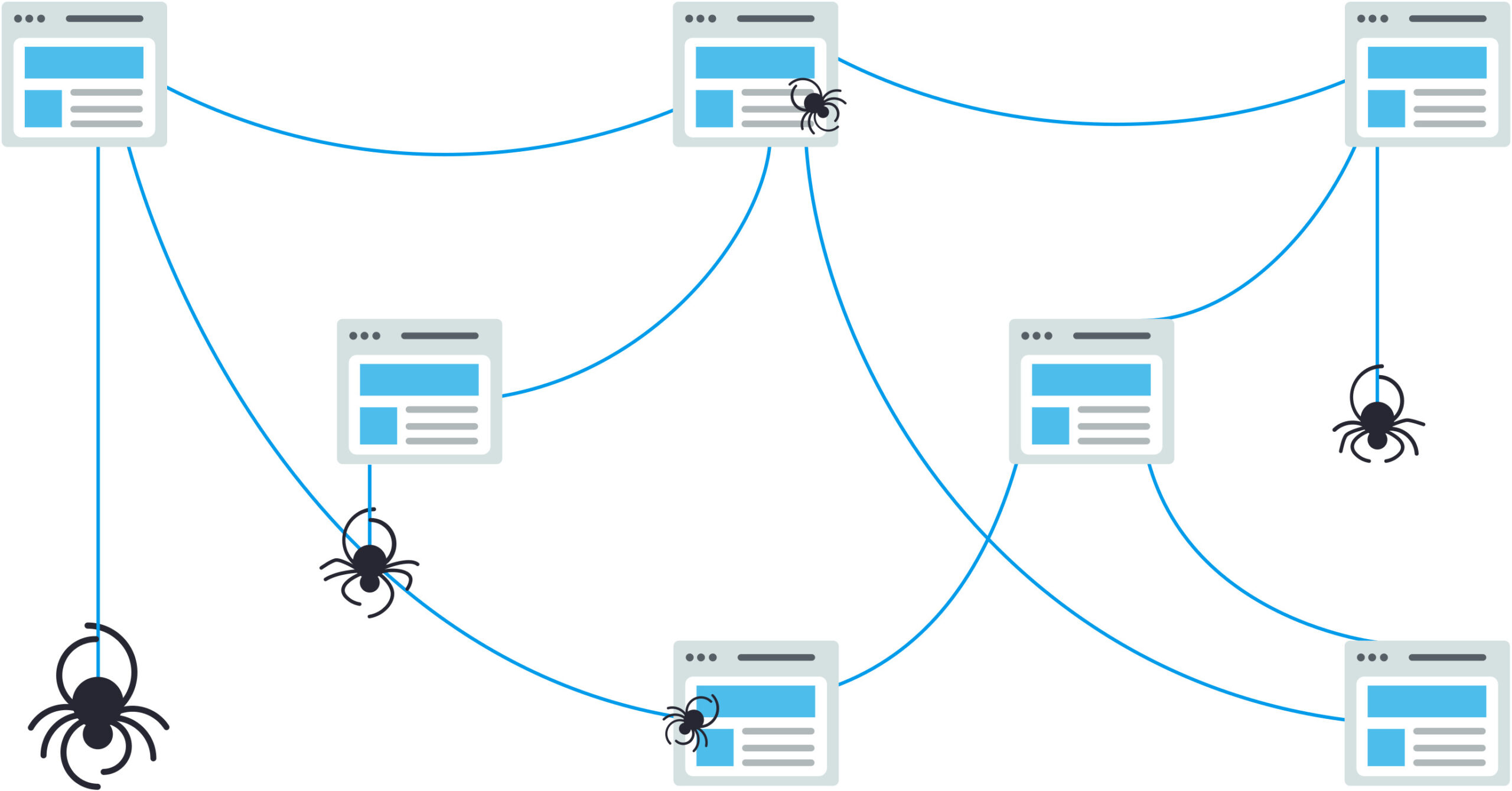

Search engines use web crawlers, also known as spiders or bots, to discover new and updated content on the internet. These crawlers follow links from one page to another, collecting page content for the search engine to index along the way.

The Role of Web Crawlers in Search Engine Indexing Algorithms

Web crawlers are an essential component of the search engine indexing process. They systematically browse the internet, following links and fetching the content of each page they visit so that it can be indexed. Crawlers also revisit pages, which lets search engines update their index when a web page changes.

Challenges of Crawling the Vast Web

The internet is a massive and ever-growing entity, presenting significant challenges for web crawlers. They must navigate billions of web pages, handle duplicate content, and decide during the crawl which pages are worth indexing. Search engines use various techniques, such as prioritizing popular pages and limiting the crawl depth, to overcome these challenges.
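To make the crawl-depth idea concrete, here is a minimal breadth-first crawler sketch. It assumes a hypothetical in-memory link graph (`LINK_GRAPH`) in place of real HTTP requests, and `crawl` and `max_depth` are illustrative names, not any search engine’s actual code. The `seen` set keeps the crawler from fetching the same URL twice, and pages at the depth limit are recorded but their links are not followed.

```python
from collections import deque

# Hypothetical link graph standing in for real fetches:
# each URL maps to the URLs its page links to.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/pricing", "https://example.com/blog"],
    "https://example.com/pricing": ["https://example.com/"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/pricing"],
    "https://example.com/blog/post-1": ["https://example.com/blog/post-2"],
    "https://example.com/blog/post-2": [],
}

def crawl(seed: str, max_depth: int) -> list[str]:
    """Breadth-first crawl from `seed`, visiting each URL at most once
    and not following links more than `max_depth` hops from the seed."""
    seen = {seed}
    frontier = deque([(seed, 0)])  # (url, depth) pairs waiting to be fetched
    visited = []
    while frontier:
        url, depth = frontier.popleft()
        visited.append(url)  # a real crawler would fetch and store the page here
        if depth == max_depth:
            continue  # depth limit reached: don't expand this page's links
        for link in LINK_GRAPH.get(url, []):
            if link not in seen:  # skip URLs already queued or visited
                seen.add(link)
                frontier.append((link, depth + 1))
    return visited

print(crawl("https://example.com/", max_depth=1))
# ['https://example.com/', 'https://example.com/pricing', 'https://example.com/blog']
```

Replacing the FIFO queue with a priority queue keyed on some popularity estimate would turn this into the “prioritize popular pages” strategy mentioned above.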

How Do Search Engines Index Web Pages?

Once a web crawler discovers a page, the search engine performs several steps to index its content.

Parsing and Analyzing Page Content

The first step in indexing a web page is parsing and analyzing its content. This involves breaking down the page into its constituent parts and identifying the key elements.
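As a rough illustration of “breaking down the page,” the sketch below uses Python’s standard-library `html.parser` to sort a page’s text into title, headings, and body text while skipping scripts and styles. `PageParser` and `parse_page` are hypothetical names, and this is a simplified assumption of how such a step might look; real search engines use far more robust parsing pipelines.

```python
from html.parser import HTMLParser

class PageParser(HTMLParser):
    """Hypothetical sketch: sorts a page's visible text into title, headings, and body."""

    HEADINGS = {"h1", "h2", "h3", "h4", "h5", "h6"}
    SKIP = {"script", "style"}  # content that is not visible text
    VOID = {"area", "base", "br", "col", "embed", "hr", "img", "input",
            "link", "meta", "source", "track", "wbr"}  # tags with no closing tag

    def __init__(self):
        super().__init__()
        self.title = ""
        self.headings: list[str] = []
        self.body_text: list[str] = []
        self._open: list[str] = []  # stack of currently open tags

    def handle_starttag(self, tag, attrs):
        if tag not in self.VOID:
            self._open.append(tag)

    def handle_endtag(self, tag):
        # Pop back to the matching open tag, tolerating unclosed inner tags.
        if tag in self._open:
            while self._open.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if not text or any(t in self.SKIP for t in self._open):
            return
        if "title" in self._open:
            self.title += text
        elif any(t in self.HEADINGS for t in self._open):
            self.headings.append(text)
        else:
            self.body_text.append(text)

def parse_page(html: str) -> PageParser:
    parser = PageParser()
    parser.feed(html)
    parser.close()
    return parser

page = parse_page("""<html><head><title>Acme CRM</title><meta charset="utf-8"></head>
<body><h1>Pricing</h1><p>Plans start at <b>$10</b> per month.</p>
<script>var tracking = true;</script></body></html>""")
print(page.title, page.headings, " ".join(page.body_text))
```

Separating titles and headings from body text matters because, as the list below notes, search engines analyze these elements individually.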

- Text Analysis: Search engines extract and analyze the textual content of a web page, including titles, headings, and body text. They use natural language processing techniques to understand the meaning and context of the content.

- Image and Multimedia Indexing: In addition to text, search engines also index images, videos, and other multimedia content. They use techniques like optical character recognition (OCR) and image recognition to understand and categorize visual content.

- Handling Duplicate Content: Duplicate content is a common issue on the web. Search engines use algorithms to identify and filter out duplicate or near-duplicate pages to ensure the best user experience and maintain the quality of their index.
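One common way to detect near-duplicate text is to break each page into overlapping word sequences (“shingles”) and compare the resulting sets with Jaccard similarity. The sketch below assumes 3-word shingles and a hypothetical 0.8 threshold; it is not any particular search engine’s method, and large-scale systems typically use compact fingerprints such as MinHash or SimHash rather than comparing full shingle sets.

```python
import re

def shingles(text: str, k: int = 3) -> set[tuple[str, ...]]:
    """Return the set of k-word shingles (overlapping word sequences) in `text`."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}

def jaccard(a: set, b: set) -> float:
    """Jaccard similarity: size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)

def near_duplicates(text_a: str, text_b: str, threshold: float = 0.8) -> bool:
    """Treat two texts as near-duplicates if their shingle sets overlap enough."""
    return jaccard(shingles(text_a), shingles(text_b)) >= threshold

a = "Our CRM helps small teams track leads and close deals faster."
b = "Our CRM helps small teams track leads and close deals faster!"
c = "Read our guide to choosing an email marketing platform."
print(near_duplicates(a, b), near_duplicates(a, c))  # True False
```

Normalizing case and punctuation before shingling is what lets trivially different copies, like `a` and `b`, match.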

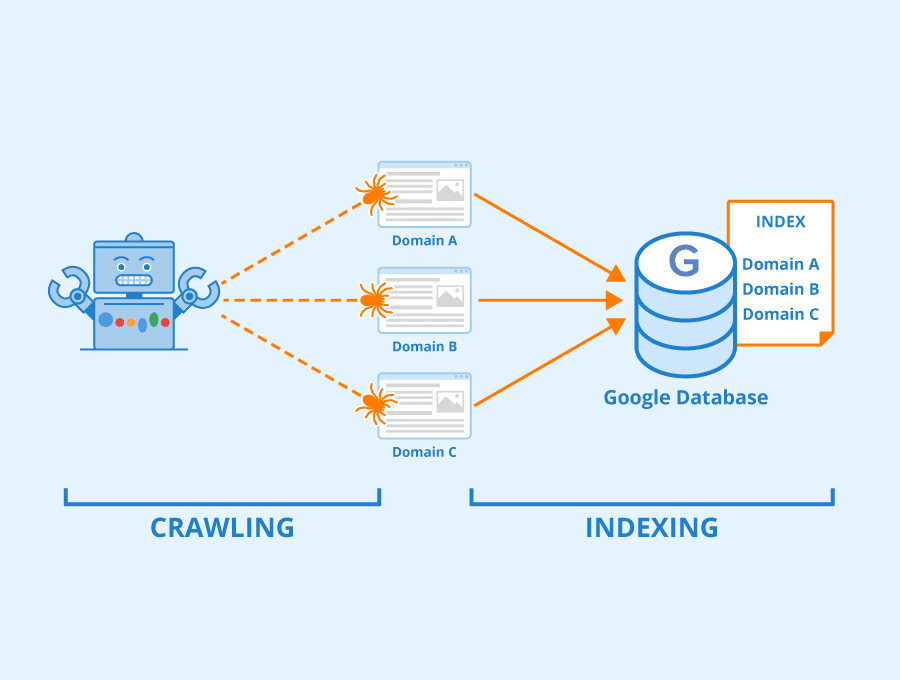

Storing Web Page Data in the Index

After analyzing a web page, search engines store its data in a massive database called an index. The index is designed to allow for fast and efficient retrieval of relevant pages for a given search query.

- Inverted Index Structure: An inverted index is a data structure that allows for quick lookup of web pages containing specific keywords. It maps each keyword to a list of documents that contain it, enabling fast retrieval of relevant results.

- Compressed Storage Techniques: To efficiently store and process vast amounts of web page data, search engines employ various compression techniques. These techniques reduce storage requirements and improve the performance of the indexing and retrieval process.
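The two ideas above can be sketched together: build an inverted index that maps each term to a sorted list of document IDs, then compress a posting list by storing the gaps between IDs and variable-byte encoding each gap (7 data bits per byte, with the high bit marking a number’s last byte). Variable-byte encoding is one textbook compression scheme, chosen here as an assumption; the function names and sample documents are hypothetical.

```python
import re
from collections import defaultdict

def build_inverted_index(docs: dict[int, str]) -> dict[str, list[int]]:
    """Map each term to the sorted list of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for term in re.findall(r"[a-z0-9]+", text.lower()):
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}

def encode_postings(doc_ids: list[int]) -> bytes:
    """Gap-encode a sorted posting list, then variable-byte encode each gap."""
    out = bytearray()
    prev = 0
    for doc_id in doc_ids:
        gap, prev = doc_id - prev, doc_id
        groups = []
        while True:
            groups.append(gap & 0x7F)  # low 7 bits
            gap >>= 7
            if gap == 0:
                break
        groups.reverse()       # most significant 7-bit group first
        groups[-1] |= 0x80     # high bit flags the last byte of this gap
        out.extend(groups)
    return bytes(out)

def decode_postings(data: bytes) -> list[int]:
    """Invert encode_postings: rebuild each gap, then running-sum back to IDs."""
    ids, n, prev = [], 0, 0
    for byte in data:
        n = (n << 7) | (byte & 0x7F)
        if byte & 0x80:  # last byte of this gap
            prev += n
            ids.append(prev)
            n = 0
    return ids

docs = {1: "CRM software for startups", 2: "Email marketing software", 3: "Free CRM for small teams"}
index = build_inverted_index(docs)
print(index["crm"])  # [1, 3]
print(len(encode_postings([5, 130, 20000])))  # 5
```

Because IDs in a posting list are sorted, the gaps are usually small numbers that fit in one byte; here three document IDs take 5 bytes instead of 12 as fixed 32-bit integers.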

How Does a Search Engine Index a Site?

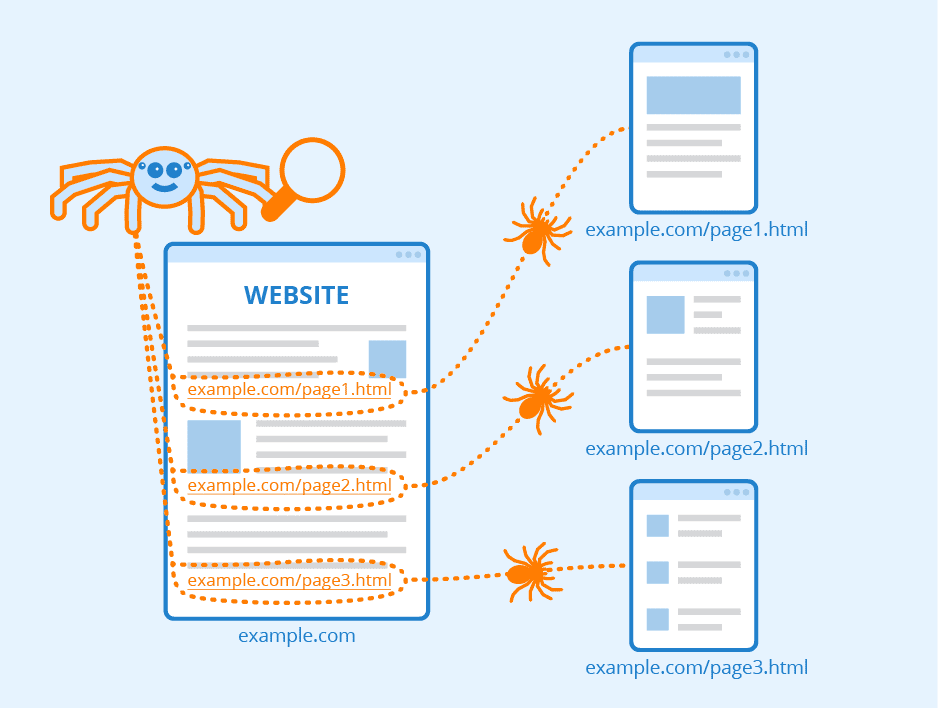

Search engines follow a multi-step process to index a website:

- Discovery: Web crawlers find the site through links from other sites or through URLs submitted directly, for example in a sitemap.

- Crawling: The crawler navigates through the site’s pages, following internal links and discovering new content.

- Indexing: The search engine analyzes and stores the content of each page in its index.

- Retrieval: When a user performs a search query, the search engine retrieves relevant pages from its index and ranks them using many signals, such as relevance to the query, the links pointing to each page, and user behaviour.
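The retrieval step can be sketched with an inverted index like the one described earlier: for a multi-word query, the engine can intersect the sorted posting lists of the query terms to find pages that contain all of them. The index contents, `intersect`, and `and_query` below are hypothetical, and this only finds candidate pages; ranking them is a separate step.

```python
def intersect(p1: list[int], p2: list[int]) -> list[int]:
    """Merge-style intersection of two sorted posting lists in O(len(p1) + len(p2))."""
    i = j = 0
    result = []
    while i < len(p1) and j < len(p2):
        if p1[i] == p2[j]:
            result.append(p1[i])
            i += 1
            j += 1
        elif p1[i] < p2[j]:
            i += 1
        else:
            j += 1
    return result

def and_query(index: dict[str, list[int]], terms: list[str]) -> list[int]:
    """Return IDs of documents containing every query term. Starting with the
    shortest posting list keeps the intermediate results small."""
    postings = sorted((index.get(t.lower(), []) for t in terms), key=len)
    if not postings:
        return []
    result = postings[0]
    for p in postings[1:]:
        result = intersect(result, p)
    return result

# Hypothetical index: term -> sorted document IDs
INDEX = {"crm": [1, 3, 7, 9], "pricing": [3, 4, 9], "startups": [2, 3, 9, 11]}
print(and_query(INDEX, ["CRM", "pricing"]))  # [3, 9]
```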

4 Tools For Search Engine Indexing

Several tools can help website owners optimize their sites for search engine indexing:

- Sitemaps: A sitemap is an XML file that lists the URLs on a website that you want search engines to crawl, optionally with details such as when each page was last modified. It helps search engines discover and crawl the site more efficiently.

- Google Search Console: Google Search Console is a free tool that allows website owners to monitor and optimize their site’s presence in Google search results. It provides valuable insights into indexing status, crawl errors, and search performance.

- Alternative Search Engine Consoles: Other search engines, such as Bing and Yandex, offer similar tools to help website owners optimize their sites for indexing and search visibility.

- Robots.txt: The robots.txt file is a text file that tells search engine crawlers which pages or sections of a website they should or should not crawl. It helps you manage crawling and your crawl budget, but it does not by itself keep a page out of the index; a noindex directive does that.
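For the sitemap tool above, here is a small sketch that generates an XML sitemap with Python’s standard-library `xml.etree.ElementTree`. It follows the sitemaps.org format (`urlset`, `url`, `loc`, `lastmod`); `build_sitemap` and the example URLs and dates are hypothetical, and `ET.indent` requires Python 3.9 or later.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, str]]) -> str:
    """Return sitemap XML for a list of (URL, last-modified date) pairs."""
    urlset = ET.Element("urlset", {"xmlns": SITEMAP_NS})
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # ElementTree escapes & and < for us
        ET.SubElement(url, "lastmod").text = lastmod  # W3C date format, e.g. 2024-05-01
    ET.indent(urlset)  # pretty-print (Python 3.9+)
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"

print(build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/pricing", "2024-04-18"),
]))
```

Once generated, the file is typically served at a URL such as `/sitemap.xml` and submitted through Google Search Console or an equivalent console.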

How Do Indexing Algorithms Interpret Web Content?

Search engine indexing algorithms use various techniques to understand and interpret web page content:

- Natural Language Processing (NLP): NLP techniques help search engines understand the meaning and context of textual content.

- Entity Recognition: Algorithms identify and extract entities, such as people, places, and things, from web pages to better understand the content.

- Sentiment Analysis: Sentiment analysis techniques estimate the emotional tone of a web page, which could, in principle, be used as a ranking signal.

- Topic Modeling: Topic modeling algorithms identify the main themes and topics of a web page, helping search engines categorize and understand the content.

Key Search Engine Indexing Algorithms

Several key algorithms underpin how modern search engines index and rank web pages:

PageRank Algorithm

Developed by Google co-founder Larry Page together with Sergey Brin, the PageRank algorithm measures the importance and authority of web pages based on the quality and quantity of links pointing to them.

How Does PageRank Measure Page Importance?

PageRank works on the premise that a web page is important if other important pages link to it. The algorithm assigns a score to each page based on the number and quality of inbound links.

The Mathematics Behind PageRank

PageRank uses a recursive formula that takes into account the number of inbound links, the PageRank of the linking pages, and the number of outbound links on each linking page. It also includes a damping factor, which models a user who sometimes jumps to a random page instead of following a link.
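In its commonly used normalized form, the formula is PR(p) = (1 − d) / N + d × Σ PR(q) / L(q), where the sum runs over every page q that links to p, L(q) is the number of outbound links on q, N is the total number of pages, and d is the damping factor (0.85 in the original paper). The sketch below computes it by power iteration on a hypothetical four-page graph. Spreading the rank of pages with no outbound links evenly across all pages is a common convention we assume here, not a detail from Google’s production system.

```python
def pagerank(links: dict[str, list[str]], d: float = 0.85,
             tol: float = 1e-10, max_iter: int = 200) -> dict[str, float]:
    """Power-iteration PageRank over a link graph {page: [pages it links to]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    if not pages:
        return {}
    n = len(pages)
    pr = {p: 1.0 / n for p in pages}  # start from a uniform distribution
    for _ in range(max_iter):
        # Rank held by "dangling" pages (no outbound links) is spread evenly.
        dangling = sum(pr[p] for p in pages if not links.get(p))
        new = {p: (1 - d) / n + d * dangling / n for p in pages}
        for src, targets in links.items():
            if targets:
                share = d * pr[src] / len(targets)  # d * PR(q) / L(q)
                for t in targets:
                    new[t] += share
        delta = sum(abs(new[p] - pr[p]) for p in pages)
        pr = new
        if delta < tol:  # scores have stopped changing
            break
    return pr

links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
print(sorted(ranks, key=ranks.get, reverse=True))  # ['C', 'A', 'B', 'D']
```

Page C ranks highest because three pages link to it, while D, which nothing links to, keeps only the baseline (1 − d) / N.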

Term Frequency and Inverse Document Frequency Algorithm (TF-IDF)

TF-IDF is a statistical measure used to evaluate the importance of a word in a document within a collection of documents.

Calculating TF-IDF Scores

The TF-IDF score is calculated by multiplying two components:

- Term Frequency (TF): The frequency of a word in a document.

- Inverse Document Frequency (IDF): A measure of how rare a word is across all documents in the collection.

Words with high TF-IDF scores, meaning words that appear often in a given document but rarely in the rest of the collection, are considered more important and relevant to that document.
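The calculation can be sketched in a few lines. TF-IDF has several variants; this one assumes tf(t, d) = (count of t in d) / (number of words in d) and idf(t) = log(N / df(t)), where N is the number of documents and df(t) is how many documents contain t. The `tf_idf` function and the sample documents are hypothetical.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z0-9]+", text.lower())

def tf_idf(docs: list[str]) -> list[dict[str, float]]:
    """Return one {term: TF-IDF score} dict per document."""
    tokenized = [tokenize(d) for d in docs]
    n_docs = len(docs)
    # Document frequency: in how many documents each term appears.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        total = len(tokens)
        scores.append({
            term: (count / total) * math.log(n_docs / df[term])  # TF x IDF
            for term, count in counts.items()
        })
    return scores

docs = ["crm for startups", "crm pricing", "crm crm integrations"]
scores = tf_idf(docs)
print(max(scores[1], key=scores[1].get))  # pricing
```

Note that “crm” scores 0 in every document: it appears in all of them, so log(N / df) = log(1) = 0, which is exactly how IDF discounts words that don’t distinguish one document from another.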

HITS Algorithm

The Hyperlink-Induced Topic Search (HITS) algorithm, also known as Hubs and Authorities, is a link analysis algorithm that rates web pages based on their authority and hub value.

Hubs and Authorities

- Hubs: Web pages that link to many other relevant pages.

- Authorities: Web pages that are linked to by many relevant hubs.

The HITS algorithm assigns each web page a hub score and an authority score: a page’s authority score depends on the hub scores of the pages linking to it, and its hub score depends on the authority scores of the pages it links to.

Iterative Calculations

The hub and authority scores are calculated iteratively, with each iteration updating the scores based on the scores of the linked pages. The process continues until the scores converge to stable values.
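The iteration can be sketched as follows: each round sets a page’s authority to the sum of the hub scores of pages linking to it, sets its hub score to the sum of the authority scores of pages it links to, then normalizes both so they don’t grow without bound. The `hits` function and the example graph (a directory and a blog linking to CRM product pages) are hypothetical.

```python
import math

def hits(links: dict[str, list[str]], iterations: int = 100, tol: float = 1e-10):
    """Return (hub, authority) score dicts for a link graph {page: [linked pages]}."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    hub = {p: 1.0 for p in pages}
    auth = {p: 1.0 for p in pages}
    for _ in range(iterations):
        # Authority: sum of hub scores of the pages that link in.
        new_auth = {p: 0.0 for p in pages}
        for src, targets in links.items():
            for t in targets:
                new_auth[t] += hub[src]
        # Hub: sum of authority scores of the pages linked to.
        new_hub = {p: sum(new_auth[t] for t in links.get(p, [])) for p in pages}
        # Normalize to unit length (guarding against an all-zero vector).
        a_norm = math.sqrt(sum(v * v for v in new_auth.values())) or 1.0
        h_norm = math.sqrt(sum(v * v for v in new_hub.values())) or 1.0
        new_auth = {p: v / a_norm for p, v in new_auth.items()}
        new_hub = {p: v / h_norm for p, v in new_hub.items()}
        delta = sum(abs(new_auth[p] - auth[p]) + abs(new_hub[p] - hub[p]) for p in pages)
        hub, auth = new_hub, new_auth
        if delta < tol:  # scores have converged
            break
    return hub, auth

links = {"directory": ["crm-a", "crm-b", "crm-c"], "blog": ["crm-a", "crm-b"]}
hub, auth = hits(links)
print(max(hub, key=hub.get))  # directory
```

Here `crm-a` and `crm-b` end up as the strongest authorities because both hubs link to them, and `directory` is the strongest hub because it links to all three authorities.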

Other Important Indexing Algorithms

Several other algorithms play important roles in search engine indexing:

Suffix Trees and Arrays

Suffix trees and arrays are data structures used for efficient string searching and pattern matching. They help search engines quickly find relevant pages based on search queries.
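A suffix array is simply the list of a text’s suffix start positions, sorted alphabetically by suffix; because all suffixes beginning with a given pattern sit next to each other in that order, two binary searches find every occurrence. The sketch below uses naive construction (sorting all suffixes), which is fine for illustration but far slower than the specialized construction algorithms used in practice. The function names are hypothetical.

```python
def build_suffix_array(text: str) -> list[int]:
    """Start positions of all suffixes of `text`, sorted lexicographically.
    Naive construction: O(n^2 log n) in the worst case."""
    return sorted(range(len(text)), key=lambda i: text[i:])

def find_occurrences(text: str, sa: list[int], pattern: str) -> list[int]:
    """Binary-search the suffix array for every position where `pattern` occurs."""
    m = len(pattern)
    # Lower bound: first suffix whose first m characters are >= pattern.
    lo, hi = 0, len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        if text[sa[mid]:sa[mid] + m] < pattern:
            lo = mid + 1
        else:
            hi = mid
    start = lo
    # Upper bound: first suffix whose first m characters are > pattern.
    hi = len(sa)
    while lo < hi:
        mid = (lo + hi) // 2
        if text[sa[mid]:sa[mid] + m] <= pattern:
            lo = mid + 1
        else:
            hi = mid
    return sorted(sa[start:lo])

text = "crm pricing for crm teams"
sa = build_suffix_array(text)
print(find_occurrences(text, sa, "crm"))  # [0, 16]
```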

Latent Semantic Indexing

Latent Semantic Indexing (LSI) is a technique that identifies relationships between terms and concepts in a collection of documents, typically by applying singular value decomposition (SVD) to a matrix of term counts per document. It helps search engines understand the semantic meaning of web pages and improve the relevance of search results.

Measuring Search Engine Indexing Performance

Search engines use various metrics to evaluate the performance and effectiveness of their indexing algorithms:

Index Coverage and Freshness Metrics

- Index Coverage: The percentage of discoverable web pages that are included in the search engine’s index.

- Index Freshness: How quickly the search engine discovers and indexes new or updated content.
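Both metrics reduce to simple arithmetic once you have the underlying data. The sketch below assumes a hypothetical record of which URLs are discoverable and indexed, and of when each page changed and when that change was indexed; the function names and sample values are illustrative only. Measuring freshness as a median delay in hours is one reasonable choice among several.

```python
from datetime import datetime
from statistics import median

def index_coverage(discoverable: set[str], indexed: set[str]) -> float:
    """Percentage of discoverable URLs that are present in the index."""
    if not discoverable:
        return 0.0
    return 100 * len(discoverable & indexed) / len(discoverable)

def median_freshness_lag_hours(pages):
    """Median hours between a page changing and the index picking up the change.
    `pages` holds (modified_at, indexed_at) datetime pairs; pages whose latest
    change has not been indexed yet (indexed_at is None or older) are skipped."""
    lags = [
        (indexed_at - modified_at).total_seconds() / 3600
        for modified_at, indexed_at in pages
        if indexed_at is not None and indexed_at >= modified_at
    ]
    return median(lags) if lags else None

discoverable = {"/", "/pricing", "/blog", "/docs"}
indexed = {"/", "/pricing", "/blog"}
print(index_coverage(discoverable, indexed))  # 75.0

pages = [
    (datetime(2024, 5, 1, 9), datetime(2024, 5, 1, 15)),  # indexed 6 h after the change
    (datetime(2024, 5, 2, 0), datetime(2024, 5, 3, 0)),   # indexed 24 h after the change
    (datetime(2024, 5, 3, 0), None),                      # change not indexed yet
]
print(median_freshness_lag_hours(pages))  # 15.0
```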

Retrieval Speed and Efficiency

Search engines measure the speed and efficiency of their indexing and retrieval processes to ensure a smooth user experience. Factors such as query response time and resource utilization are closely monitored.

Evaluating Relevance of Search Results

The ultimate goal of search engine indexing is to provide users with the most relevant results for their search queries. Search engines use signals such as click-through rates and other user engagement metrics to evaluate the relevance of their search results and continuously improve their algorithms.

The Future of Search Engine Indexing

As the internet continues to evolve, search engine indexing algorithms must adapt to new challenges and opportunities:

- Indexing the Rapidly Growing Web: With the rapid, continuous growth of web content, search engines need to develop more efficient and scalable indexing techniques to keep up with the increasing volume of data.

- Advancements in AI and Natural Language Processing: Artificial intelligence and natural language processing technologies are revolutionizing search engine indexing. These advancements enable search engines to better understand user intent, context, and the semantic meaning of web content.

- Personalization and User-Specific Indexing: Search engines are increasingly focusing on personalization, tailoring search results to individual users based on their search history, location, and other factors. This trend is likely to shape the future of search engine indexing, with algorithms becoming more user-centric and context-aware.

Best Practices For Optimizing Pages For Indexing

To ensure your SaaS website is properly indexed by search engines, follow these best practices:

- Create high-quality, original content that provides value to your target audience. Avoid duplicate content; an online plagiarism checker can help you spot copied passages so you can fix them promptly.

- Use descriptive, keyword-rich titles and meta descriptions to help search engines understand your page content.

- Implement a clear and logical site structure with intuitive navigation and internal linking.

- Optimize your site’s loading speed and mobile-friendliness to improve user experience and search engine rankings.

- Submit an XML sitemap to search engines, use the robots.txt file to control crawling, and use noindex directives to keep specific pages out of the index.

- Regularly monitor your site’s indexing status and performance using tools like Google Search Console.

- Build high-quality backlinks from authoritative websites to improve your site’s visibility and authority.

FAQs:

What is the Main Purpose of Search Engine Indexing Algorithms?

The main purpose of search engine indexing algorithms is to organize and store web page data efficiently, enabling fast and accurate retrieval of relevant results for user search queries.

How Frequently Do Search Engines Update Their Indexes?

Search engines continuously update their indexes as they discover new content or changes to existing pages. The frequency of updates varies depending on factors such as the popularity and importance of the website, the crawl budget allocated to it, and the search engine’s indexing capacity.

How Does Updating Content Affect Indexing and Rankings?

Updating your website’s content regularly can positively impact its indexing and rankings. Search engines favor fresh, relevant content and may crawl and index your site more frequently if you consistently publish high-quality updates. However, it’s essential to ensure that your content updates are meaningful and provide value to your audience.

Can Website Owners Control Which Pages Get Indexed?

Yes, website owners can control which pages are indexed by search engines using various methods:

- Robots.txt: Use the robots.txt file to specify which pages or sections of your site should not be crawled by search engine bots.

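- Noindex meta tag: Add a `<meta name="robots" content="noindex">` tag to the HTML head of a page to instruct search engines not to index that specific page. Crawlers can only see this tag if they are allowed to crawl the page, so don’t also block that page in robots.txt.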

- Canonical tags: Use canonical tags to indicate the preferred version of a page when multiple versions exist, helping to avoid duplicate content issues.
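The first two controls can be checked with Python’s standard library: `urllib.robotparser` evaluates robots.txt rules (crawling), and `html.parser` can detect a robots meta tag containing `noindex` (indexing). In the sketch below, the robots.txt rules, URLs, `is_crawl_allowed`, and `has_noindex` are hypothetical examples, and the user agent is just a sample string; real crawlers may interpret edge cases of robots.txt differently from Python’s parser.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
"""

def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Check whether robots.txt rules allow `user_agent` to crawl `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)

class RobotsMetaParser(HTMLParser):
    """Looks for <meta name="robots" content="...noindex..."> in a page."""

    def __init__(self):
        super().__init__()
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "meta" and (attributes.get("name") or "").lower() == "robots":
            directives = [d.strip().lower() for d in (attributes.get("content") or "").split(",")]
            if "noindex" in directives:
                self.noindex = True

def has_noindex(html: str) -> bool:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.noindex

print(is_crawl_allowed(ROBOTS_TXT, "https://example.com/admin/settings"))  # False
print(has_noindex('<head><meta name="robots" content="noindex, follow"></head>'))  # True
```

Keeping the two checks separate mirrors the distinction above: a URL can be crawlable yet marked noindex, and a page blocked by robots.txt never gets the chance to show its noindex tag to the crawler.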

How Can You Check if a Page Has Been Indexed?

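You can check whether a page has been indexed by searching for its URL in a search engine; in Google, the site: operator (for example, site:example.com/pricing) restricts results to a specific site or path. If the page appears in the search results, it has been indexed.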

Additionally, tools like Google Search Console provide detailed information about your site’s indexing status, including the number of pages indexed, any indexing errors, and the date of the last crawl.

Another useful option is the URL Inspection tool in Google Search Console, which shows the index status of an individual URL and lets you request indexing for it, for example after you update a page or if it has dropped out of the index.

If you are doing local SEO (search engine optimization), it is also important to claim, verify, and optimize a free Google Business Profile (formerly Google My Business) for the business’s physical location or service area. This can improve the business’s visibility in local search results and drive more traffic to it.

Can Social Media Signals Impact Search Engine Indexing?

While social media signals, such as likes, shares, and comments, do not directly impact search engine indexing, they can indirectly influence your site’s visibility and authority. Social media engagement can drive traffic to your site, increasing its popularity and potentially attracting more inbound links. These factors can contribute to faster indexing and better rankings, which in turn raise your visibility in search results.

Conclusion

Understanding search engine indexing algorithms is essential for SaaS companies looking to improve their online visibility and attract organic traffic. By creating high-quality content, optimizing your site structure, and leveraging tools like sitemaps and Google Search Console, you can help search engines efficiently discover, crawl, and index your web pages.

At VH Info, we specialize in providing actionable insights and strategies for SaaS link building. Our team of experts stays up to date with the latest developments in search engine indexing algorithms, ensuring that our clients’ websites are optimized for maximum visibility and performance.

Use the tips provided in this guide, and come to VH Info for links to enhance your website’s ranking, attract additional visitors, and expand your online business.